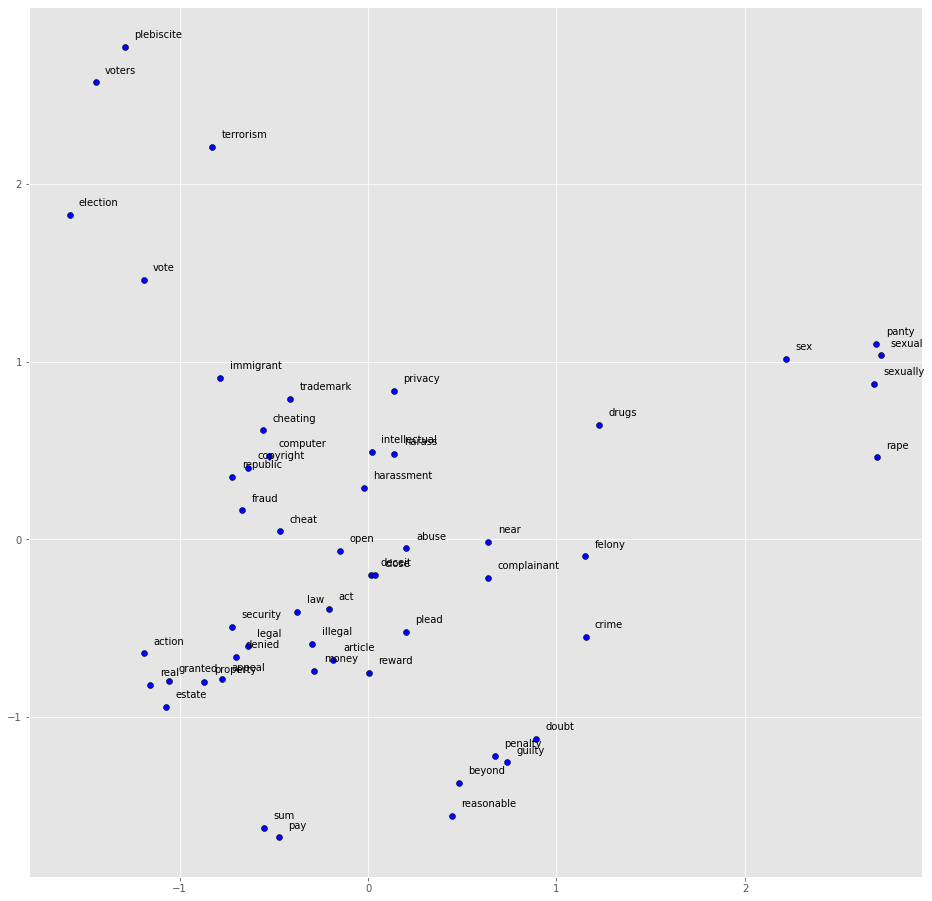

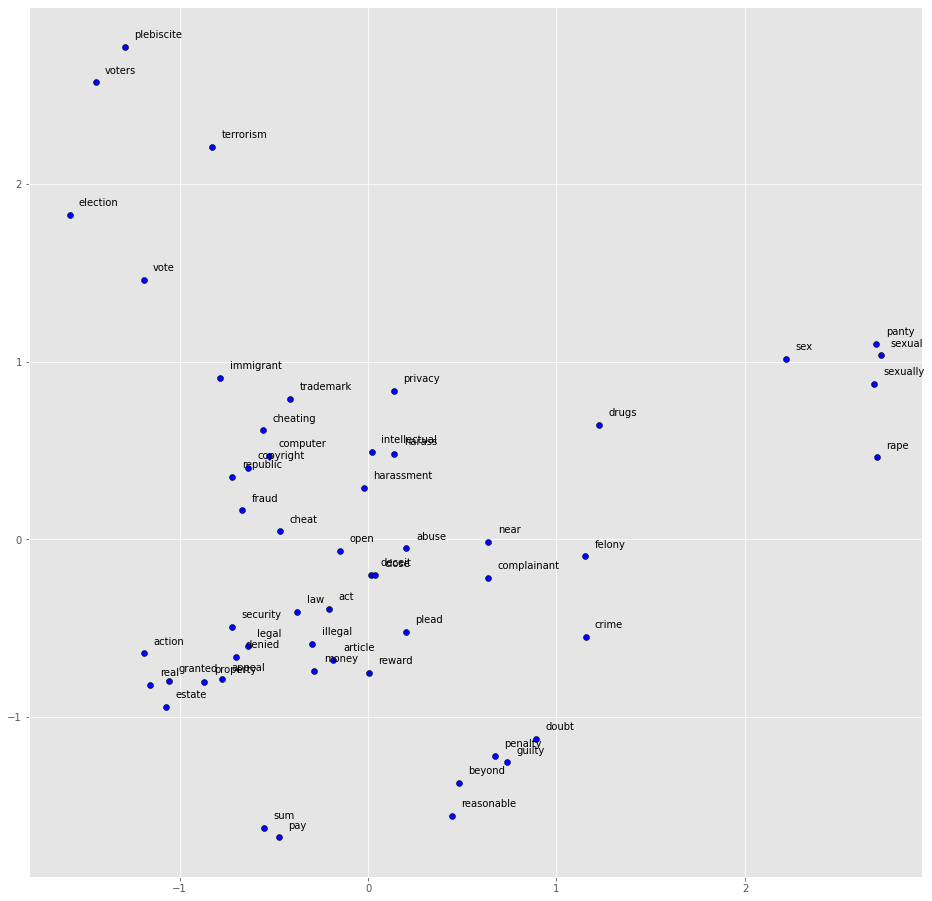

In this research, we trained nine word embedding models on a large corpus of Philippine Supreme Court decisions, resolutions, and opinions from 1901 through 2020. We evaluated their accuracy on a customized word analogy test set of 4,510 questions spanning seven syntactic and semantic categories. Word2vec models performed better on the semantic categories, while fastText models performed better on the syntactic categories. We also compared our word vector models with a model trained on a large legal corpus from other countries.
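Word analogy questions take the form *a* : *b* :: *c* : ?, and a model answers one correctly when its best guess for "?" matches the expected word. The sketch below shows how per-category accuracy on such a test set can be computed. It assumes the common vector-offset method (3CosAdd) used by the original word2vec analogy test, which picks the word closest to *b* − *a* + *c*. The paper's exact scoring procedure may differ. Several other details are also assumptions: the toy 3-dimensional vectors, the two example categories, skipping questions with out-of-vocabulary words, and the helper names `solve_analogy` and `analogy_accuracy`. None of these come from the paper.

```python
import math
from collections import defaultdict

# Hypothetical toy vectors for illustration only; real embeddings trained on
# jurisprudence would have hundreds of dimensions and a large vocabulary.
EMBEDDINGS = {
    "court":    [1.0, 0.0, 0.0],
    "courts":   [1.0, 1.0, 0.0],
    "judge":    [0.0, 0.0, 1.0],
    "judges":   [0.0, 1.0, 1.0],
    "petition": [0.5, 0.0, 0.5],
}

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def solve_analogy(emb, a, b, c):
    """3CosAdd: return the word closest to b - a + c, excluding a, b, and c."""
    target = [vb - va + vc for va, vb, vc in zip(emb[a], emb[b], emb[c])]
    candidates = (w for w in emb if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine(emb[w], target))

def analogy_accuracy(emb, questions):
    """questions: iterable of (category, a, b, c, expected).

    Returns a dict mapping each category to its accuracy. Questions containing
    out-of-vocabulary words are skipped (an assumption, not the paper's rule).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for category, a, b, c, expected in questions:
        if not all(w in emb for w in (a, b, c, expected)):
            continue
        total[category] += 1
        if solve_analogy(emb, a, b, c) == expected:
            correct[category] += 1
    return {cat: correct[cat] / total[cat] for cat in total}

# Hypothetical questions; the paper's test set has 4,510 in seven categories.
QUESTIONS = [
    ("syntactic", "court", "courts", "judge", "judges"),
    ("syntactic", "judge", "judges", "court", "courts"),
    ("semantic", "court", "judge", "courts", "petition"),  # model answers "judges"
    ("semantic", "court", "statute", "judge", "judges"),   # skipped: "statute" is OOV
]

print(analogy_accuracy(EMBEDDINGS, QUESTIONS))
# → {'syntactic': 1.0, 'semantic': 0.0}
```

Comparing models this way gives a separate accuracy per category rather than one overall score. A per-category breakdown is what allows one model family to be stronger on semantic questions and another on syntactic ones.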

Keywords: GloVe, word2vec, Word Embeddings, Natural Language Processing

@CONFERENCE{Cordel2021b,

author={Peramo, E. and Cheng, C. and Cordel, M.},

booktitle={2021 International Conference on Artificial Intelligence in Information and Communication (ICAIIC)},

title={{Juris2vec: Building Word Embeddings from Philippine Jurisprudence}},

year={2021},

volume={},

number={},

pages={121-125}

}