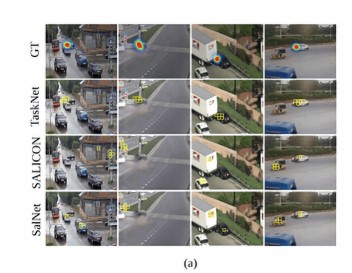

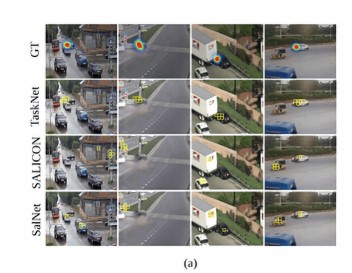

Spotting abnormal or anomalous events in footage from street and road cameras relies heavily on human observers, who are subject to fatigue, distraction, and limits on simultaneous attention. Several anomalous event detection systems based on complex computer vision algorithms and deep learning architectures have been proposed. However, these systems are objective-agnostic, which results in a high number of false negatives in task-driven abnormal event detection. A straightforward solution is to use visual attention models. However, these models are based on low-level features combined with object detectors and scene context, rather than on the object the observer is actually looking at. In this paper, we explore a task-driven, visual attention-based traffic accident detection system. We first examine human fixations under free-viewing and task-driven conditions using TaskFix, our proposed fixation dataset of traffic incidents from different road cameras and the first fixation dataset collected under a task-driven goal. We then use TaskFix to fine-tune a visual attention model, which we call TaskNet. We evaluate the fine-tuned model with quantitative and qualitative tests and compare it with other visual attention prediction architectures. The results indicate the potential of visual attention models for abnormal event detection. The dataset is available here: https://bit.ly/TaskFixDataset

Keywords: saliency models, human attention model, anomalous event detection
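The abstract does not name the quantitative metrics used to compare TaskNet with other attention models. As an illustration only, the sketch below implements two metrics that are widely used in saliency prediction benchmarks: Normalized Scanpath Saliency (NSS), which scores a predicted map against discrete fixation points, and the Pearson correlation coefficient (CC), which compares it against a continuous fixation density map. Whether the paper used these particular metrics is an assumption, and the function names `nss`, `cc`, and `_standardize` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# A saliency or fixation-density map as a row-major grid of floats.
Map = List[List[float]]


def _flatten(m: Map) -> List[float]:
    """Concatenate the rows of a 2-D map into a single list."""
    return [v for row in m for v in row]


def _standardize(values: Sequence[float]) -> List[float]:
    """Shift to zero mean and scale to unit (population) standard deviation."""
    n = len(values)
    mean = sum(values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    if std == 0:
        raise ValueError("map is constant; cannot standardize")
    return [(v - mean) / std for v in values]


def nss(saliency: Map, fixations: Sequence[Tuple[int, int]]) -> float:
    """Normalized Scanpath Saliency: mean standardized saliency at fixated (row, col) pixels."""
    z = _standardize(_flatten(saliency))
    width = len(saliency[0])
    return sum(z[r * width + c] for r, c in fixations) / len(fixations)


def cc(saliency: Map, fixation_density: Map) -> float:
    """Pearson correlation between a predicted map and a ground-truth density map."""
    a = _standardize(_flatten(saliency))
    b = _standardize(_flatten(fixation_density))
    # With population standardization, Pearson's r is the mean product of z-scores.
    return sum(x * y for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    # Hypothetical 3x3 prediction that peaks at the centre pixel.
    pred = [[0.0, 0.1, 0.0], [0.1, 0.9, 0.1], [0.0, 0.1, 0.0]]
    print(nss(pred, [(1, 1)]))  # prints a positive score because (1, 1) is the map's peak
    print(cc(pred, pred))       # a map correlates perfectly with itself (about 1.0)
```

The sketch uses plain Python so it has no dependencies; a real benchmark would typically operate on NumPy arrays at full image resolution and would usually report further metrics (for example AUC, KL divergence, or SIM) alongside NSS and CC.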

@article{juan2021investigating,
  title={Investigating Visual Attention-based Traffic Accident Detection Model},
  author={Juan, Caitlienne Diane C and Bat-og, Jaira Rose A and Wan, Kimberly K and Cordel, II, Macario O},
  journal={Philippine Journal of Science},
  volume={150},
  number={2},
  pages={515--525},
  year={2021}
}