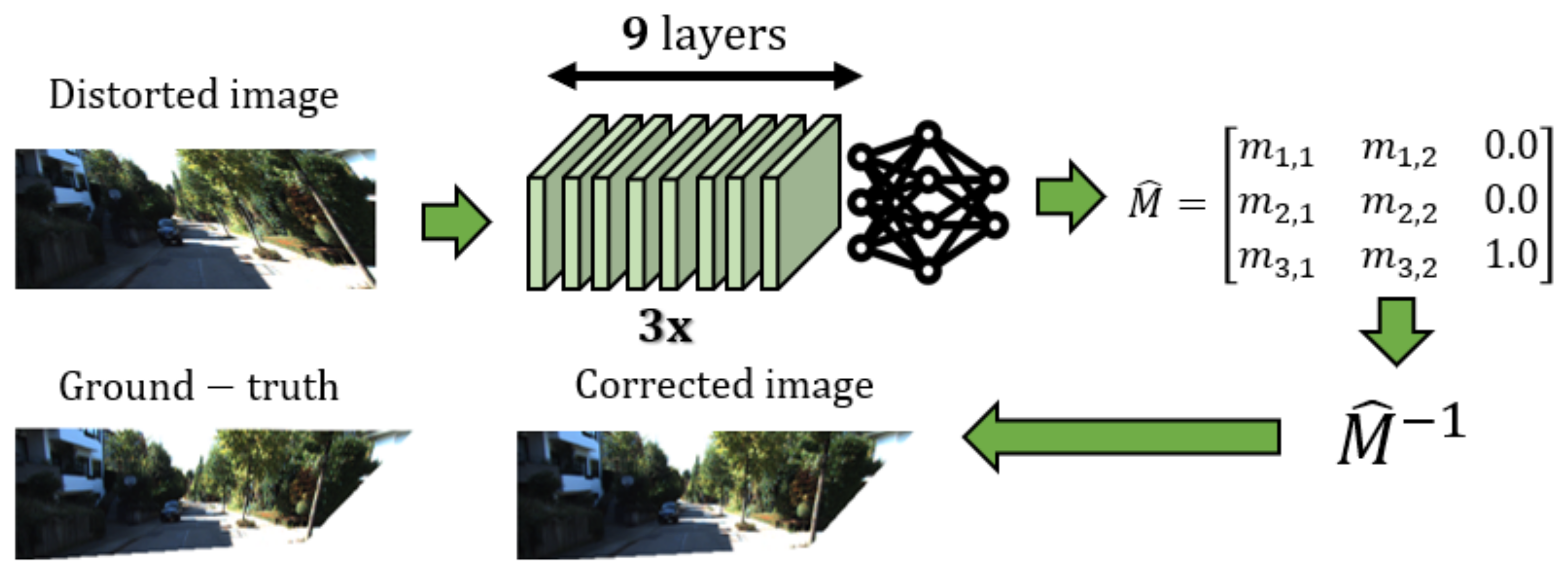

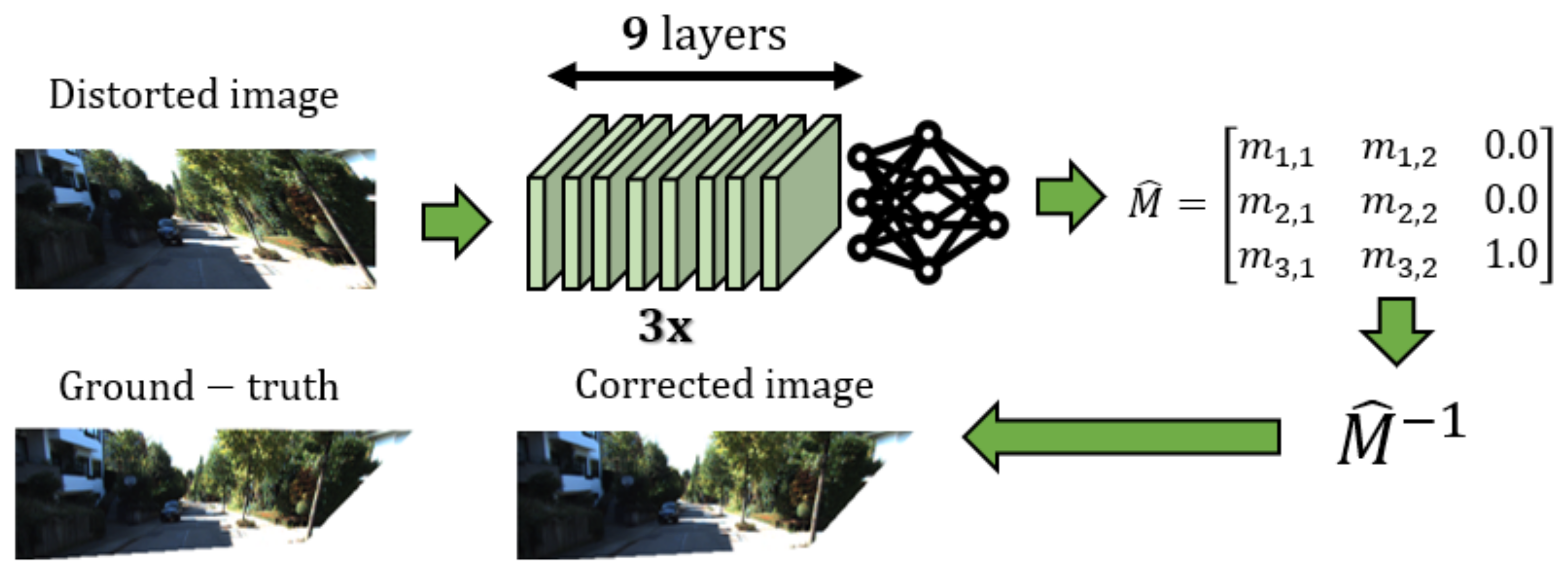

In this work, we present a network architecture with parallel convolutional neural networks (CNNs) for removing perspective distortion in images. While other works generate corrected images using generative adversarial networks or encoder-decoder networks, we propose a method in which three CNNs are trained in parallel, each predicting a specific pair of elements in the 3 × 3 transformation matrix, $\hat{M}$. The corrected image is produced by transforming the distorted input image using $\hat{M}^{-1}$. The networks are trained on a dataset of distorted images that we generated from KITTI images. Experimental results show promise in this approach: our method is capable of correcting perspective distortions in images and outperforms other state-of-the-art methods. Our method also recovers the intended scale and proportion of the image, which is not observed in other works.
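The correction step described above, transforming the distorted input with the inverse of the estimated matrix, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the matrix values, the helper `apply_homography`, and the use of the true matrix as a stand-in for the network prediction are all assumptions made for illustration, and the sketch maps corner points rather than resampling a full image.

```python
import numpy as np

def apply_homography(H, pts):
    """Apply a 3x3 projective transform H to an (N, 2) array of (x, y) points."""
    pts = np.asarray(pts, dtype=np.float64)
    # Lift to homogeneous coordinates: (x, y) -> (x, y, 1).
    homog = np.hstack([pts, np.ones((pts.shape[0], 1))])
    mapped = homog @ H.T
    # Perspective divide brings the points back to 2D.
    return mapped[:, :2] / mapped[:, 2:3]

# Hypothetical distortion matrix (illustrative values, not from the paper).
M = np.array([
    [1.0,  0.05,  3.0],
    [0.02, 1.0,  -2.0],
    [1e-4, 2e-4,  1.0],
])

# Stand-in for the predicted matrix; a trained model would only approximate M.
M_hat = M.copy()

# Corners of a hypothetical 640x480 image.
corners = np.array([[0, 0], [639, 0], [639, 479], [0, 479]], dtype=np.float64)

distorted = apply_homography(M, corners)                       # simulate the distortion
corrected = apply_homography(np.linalg.inv(M_hat), distorted)  # undo it with the inverse
```

With a perfect estimate, `corrected` matches `corners` up to floating-point error; any error in the predicted matrix shows up as residual displacement of the corners. For a full image, the same inverse matrix would typically be handed to an image-warping routine such as OpenCV's `cv2.warpPerspective`.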

Keywords: convolutional neural networks, image warping, distortion correction, computer vision

@Article{s20174898,
AUTHOR = {Del Gallego, Neil Patrick and Ilao, Joel and Cordel, Macario},
TITLE = {Blind First-Order Perspective Distortion Correction Using Parallel Convolutional Neural Networks},
JOURNAL = {Sensors},
VOLUME = {20},
YEAR = {2020},
NUMBER = {17},
ARTICLE-NUMBER = {4898},
URL = {https://www.mdpi.com/1424-8220/20/17/4898},
ISSN = {1424-8220},
DOI = {10.3390/s20174898}
}